People seem to be interested in Artificial Intelligence

The first of a few retrospective posts about my time at Web Summit 2023

A short aside

Welcome to the first post of Keeping The Lights On. I've been really keen to get back into more writing and this is the first of a few pieces I have banked up from my attendance at Web Summit 2023.

Last year I was lucky enough to receive a workplace award which could be redeemed to take on a professional learning experience inclusive of flights, accommodation, etc…

I was able to attend Web Summit and Update Conference as part of this work-funded trip to Europe. Update Conference would be a treat as it focused mostly on .NET development and Cloud, but Web Summit was definitely the one of the two where I didn't know what to expect.

The talks and workshops were a highlight of the event, so this series of posts will cover some of the key areas of learning and, where possible, I’ll aim to provide my own perspective. The conference was incredibly thought-provoking and an amazing reason to be in the gorgeous city of Lisbon.

Contrary to the smart-arse title, I enjoyed the nuanced and thought-provoking discussions surrounding Artificial Intelligence in all its forms at Web Summit 2023. I am not at all an AI expert, which is why, despite the bombardment of it at the event, I was open to learning, especially as we dabble with it more at work.

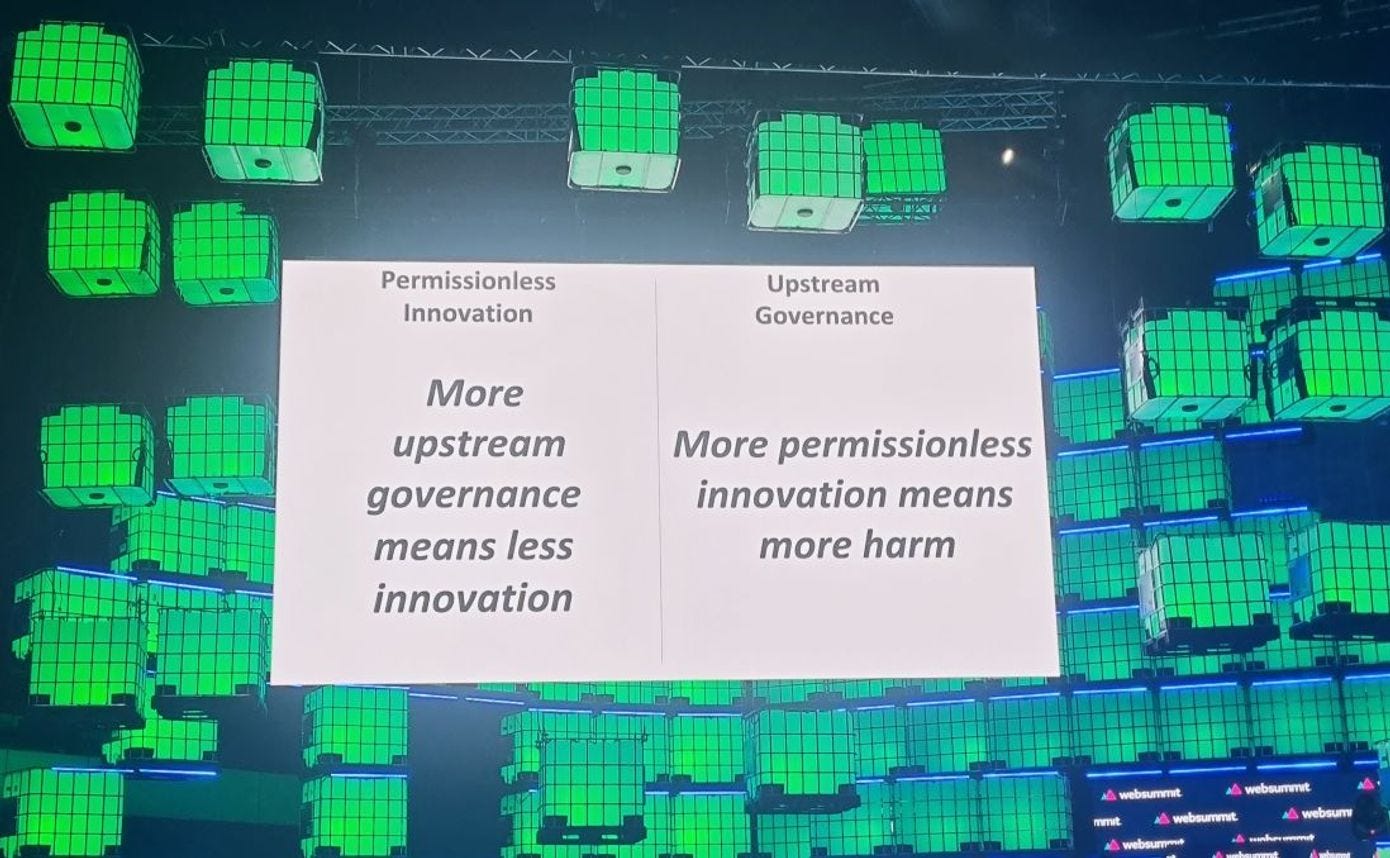

It was the definitive topic of the conference. The event began with “How do we regulate AI” by MIT’s Andrew McAfee. No matter your feelings about the technology itself, a discussion about how countries around the world are approaching the governance of this tech was a captivating way to open the conference. McAfee noted the current dichotomy for AI regulation is Permissionless Innovation versus Upstream Governance - shockingly, the principal research scientist sided with the former.

“Team permissionless innovation” stood for the following:

Permissionless is not the same as unregulated. We should be aiming to legislate in response to issues rather than lay out the rules before we even know the potential.

Why specifically regulate AI? AI can be an aggregator of information for achieving horrible things; however, the information is already out there. He noted that if someone wanted to create chemical weapons currently, they don’t need GPT to do this; the ingredients for the most part are readily available and the knowledge exists in textbooks.

Innovation can come from anywhere and we shouldn’t be handicapping it. COVID-19 vaccine development is an example: allowing the innovators to do their thing resulted in massive leaps in turnaround time and delivery.

The talk was to the point, not necessarily invalidating the arguments of his opposition but definitely making a solid case for allowing permissionless innovation in the sector where possible.

Truth be told, I do fall into the camp of hesitant adopters of the technology. Not necessarily due to Skynet-adjacent fears but more because we've seen a lot of digital snake oil the past few years (the remnants lingering around the Web 3 stands at the summit). I think part of good software development is seeing these technologies as tools to deliver a product, and leaning so heavily on one technology as the silver bullet to all your problems is only going to end poorly.

Thankfully many people at Web Summit (or at least the people I cared to watch) agreed with this sentiment. I had the pleasure of watching several talks where the speakers hammered home that AI is not going to magically create a product for you and it is more of an accelerant for delivering products rather than a product in itself.

Amy Challen, Global Head of Artificial Intelligence for Shell, gave some great insights in her interview-style talk about generative AI that won't cause chaos. Her point about generative AI being the “icing on the cake” for a company particularly resonated with me, and she really drove home the idea that digital transformation should be the prerequisite for adopting AI.

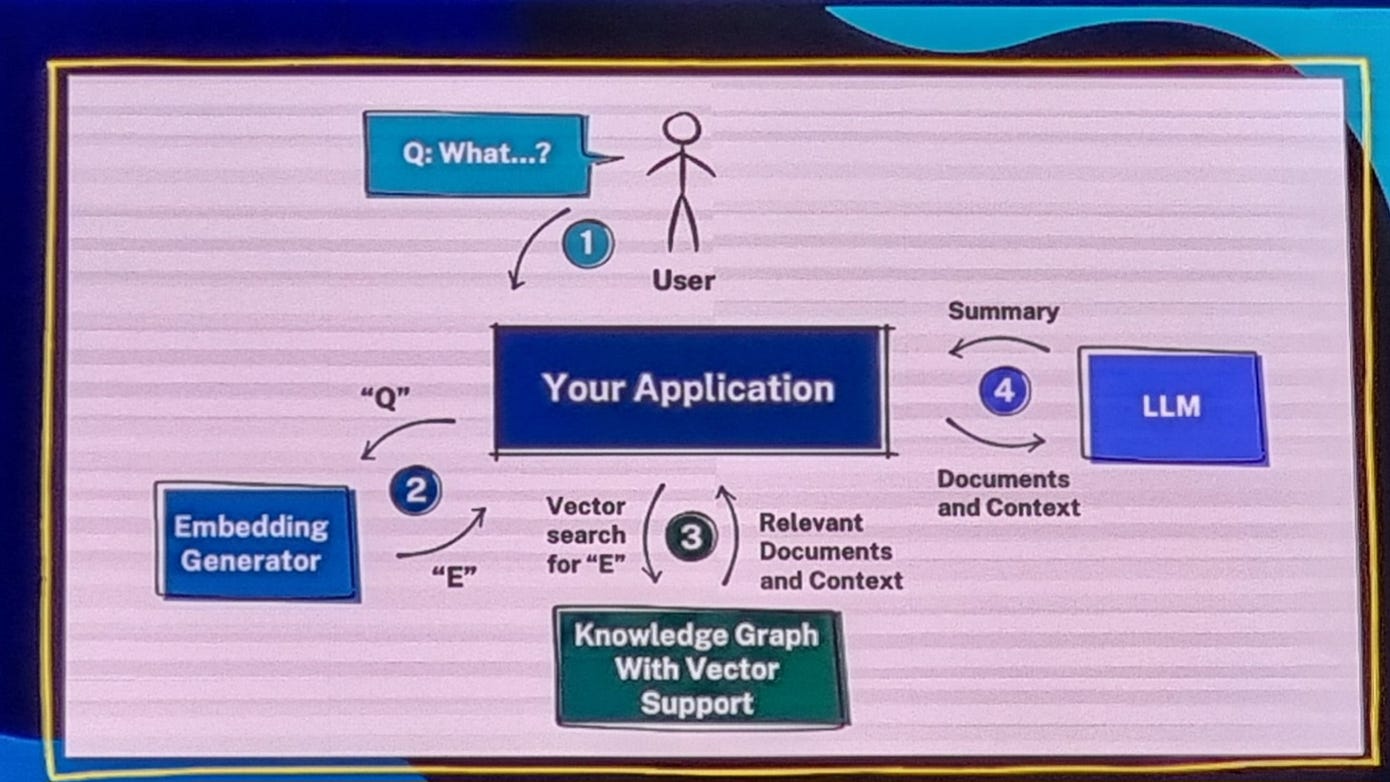

I hope that Emil Eifrem’s talk on “Solving GenAI’s hallucination problem” is eventually made available to watch online as I did appreciate how captivating and to the point it was. As a purveyor of sailor talk myself, opening a presentation with “holy shit” is a sure-fire way to get my attention. Whilst Eifrem does have a stake in advertising the Docker GenAI Stack (he owns a part of it), I appreciate the clear problem he laid out in the talk and how what is included in the stack solves it. I’d highly recommend reviewing the stack; I know I will be considering what role a Graph DB plays in future AI-related work even if it’s not specifically Neo4j.

I firmly believe the final day of the summit had the highest calibre of discussions on AI’s place in the modern world. Melanie Nakagawa, Chief Sustainability Officer at Microsoft, kicked off the last day’s proceedings with how AI is being used to help sustainability efforts globally. It's always nice to hear about software-based developments having an impact in the real world, particularly how AI tooling from Microsoft Research’s Project FarmVibes helps farmers get “more crop per drop”. Whilst initiatives like this aren't bulletproof (my partner, an agricultural sciences graduate, was very quick to point out over Messenger that the amount of wasted produce is the bigger issue, not how much we can yield), they're tackling bigger issues with the technology, which is a good break from hearing about all the ways ChatGPT can help write an email.

Andrew McAfee returned on the final day with a panel titled “Building Better AI”, where many of the points from the opening speech were placed side by side with recent government policies and agreements which aim to legislate on the technology. Dan Milmo from The Guardian did an excellent job asking the right questions and prodding for further answers. The whole back and forth was a fantastic watch and it's good to put these values to the test with actual real-world developments. Governments have generally been pretty poor at giving measured responses to developments in tech in recent years. It's pretty easy for McAfee to say what “should” be done, but Milmo’s efforts to find a middle ground where legislators take some form of preventative action are commendable. I don't currently spend my free time watching MIT researcher talks but I think McAfee is someone worth following as every talk he was involved in was high quality.

Web Summit 2023 made me much less cynical about the future of AI. Those who work with me know that's a feat in itself but, jokes aside, the presentations ranged widely from helpful tips for integrating AI into your day-to-day to broader discussions about the technology's place in changing the world. It was positioned as another part of our tech stacks that is fantastic at what it does but requires a solid product or foundation to integrate with. Another tool in the toolbox which I have greater confidence in reaching for when delivering future work.